What Is GPT-3?

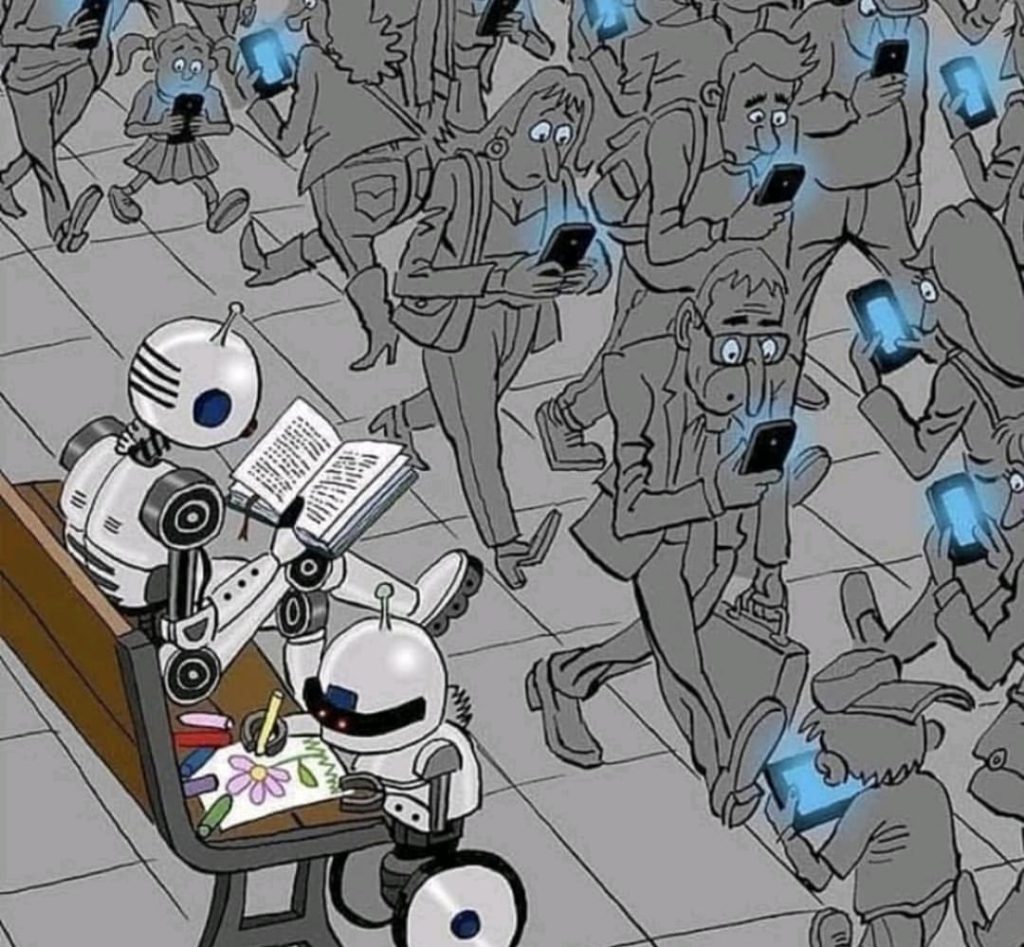

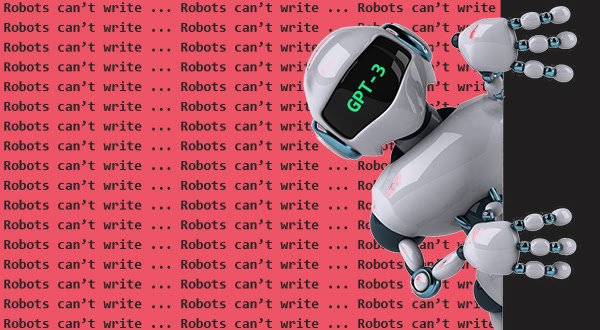

GPT-3 is a computer program created by the privately held San Francisco startup OpenAI. It is a gigantic neural network, and as such, it is part of the deep learning segment of machine learning, which is itself a branch of the field of computer science known as artificial intelligence, or AI. The program is better than any prior program at producing lines of text that sound like they could have been written by a human.

The reason that such a breakthrough could be useful to companies is that it has great potential for automating tasks. GPT-3 can respond to any text that a person types into the computer with a new piece of text that is appropriate to the context. Type a full English sentence into a search box, for example, and you’re more likely to get back a relevant response written in full sentences. That means GPT-3 can conceivably amplify human effort in a wide variety of situations, from questions and answers for customer service to due diligence document search to report generation.

The program is currently in a private beta for which people can sign up on a waitlist. It’s being offered by OpenAI as an API accessible through the cloud, and companies that have been granted access have developed some intriguing applications that use the generation of text to enhance all kinds of programs, from simple question-answering to producing programming code.

Along with the potential for automation come great drawbacks. GPT-3 is compute-hungry, putting it beyond the reach of most companies that would want to run it on-premises. Its generated text can be impressive at first blush, but long compositions tend to become somewhat senseless. And it has great potential for amplifying biases, including racism and sexism.

HOW DOES GPT-3 WORK?

GPT-3 is an example of what’s known as a language model, which is a particular kind of statistical program. In this case, it was created as a neural network.

The “GPT” in GPT-3 stands for “generative pre-trained transformer,” and this is the third version so far. It’s generative because unlike other neural networks that spit out a numeric score or a yes or no answer, GPT-3 can generate long sequences of original text as its output. It is pre-trained in the sense that it has not been built with any domain knowledge, even though it can complete domain-specific tasks, such as foreign-language translation.

A language model, in the case of GPT-3, is a program that calculates how likely one word is to appear in a text given the other words in the text. That is what is known as the conditional probability of words.
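To make the idea of conditional probability concrete, here is a minimal sketch in Python. It is not how GPT-3 is built: it assumes a toy corpus and estimates the probability of a word given only the single word before it, by counting word pairs. The function name `bigram_probabilities` and the sample sentence are illustrative assumptions.

```python
from collections import Counter, defaultdict

def bigram_probabilities(text):
    """Estimate P(next_word | previous_word) from raw pair counts."""
    words = text.lower().split()
    pair_counts = defaultdict(Counter)
    # Count how often each word follows each other word.
    for prev, nxt in zip(words, words[1:]):
        pair_counts[prev][nxt] += 1
    probs = {}
    for prev, counter in pair_counts.items():
        total = sum(counter.values())
        # Normalize counts so the probabilities for each prev sum to 1.
        probs[prev] = {word: count / total for word, count in counter.items()}
    return probs

# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat the cat ate"
probs = bigram_probabilities(corpus)
print(probs["the"])  # "cat" follows "the" twice, "mat" once
```

A model like GPT-3 conditions on far more than one preceding word, but the quantity being estimated is the same kind of thing: how likely a word is, given its context.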

While the neural network is being developed, in what is called the training phase, GPT-3 is fed millions and millions of samples of text, and it converts words into numeric representations called vectors. That is a form of data compression. The program then tries to unpack this compressed text back into a valid sentence. The task of compressing and decompressing develops the program’s accuracy in calculating the conditional probability of words.
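The step of turning words into vectors can be sketched as a lookup table. The example below is a simplification under stated assumptions: it splits text on whitespace and fills the table with random placeholder numbers, whereas a trained network learns its vectors during training. The helper names (`build_vocab`, `make_embedding_table`, `embed`) are hypothetical.

```python
import random

def build_vocab(text):
    """Assign each distinct word an integer index, in order of first appearance."""
    vocab = {}
    for word in text.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def make_embedding_table(vocab_size, dim, seed=0):
    """Create one vector of `dim` numbers per word (random placeholders, not learned)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def embed(sentence, vocab, table):
    """Replace each word in the sentence with its vector from the table."""
    return [table[vocab[word]] for word in sentence.lower().split()]

vocab = build_vocab("the cat sat on the mat")
table = make_embedding_table(len(vocab), dim=4)
vectors = embed("the cat", vocab, table)
print(len(vectors), len(vectors[0]))  # two words, four numbers each
```

The same word always maps to the same vector, which is what lets later calculations treat text as numbers.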

Once the model has been trained, meaning that its calculations of conditional probability across billions of words are as accurate as possible, it can predict what words come next when it is prompted by a person typing an initial word or words. That action of prediction is known in machine learning as inference.
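Inference can be illustrated with the same kind of toy word-pair model. The sketch below assumes a tiny training text and always picks the most frequent follower of the last word; GPT-3 itself uses a neural network and a much longer context, so treat this only as an illustration of "predict the next word, append it, repeat." The names `train_bigram_counts` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_counts(text):
    """'Training': count which words follow which in the sample text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_words=5):
    """'Inference': repeatedly append the most likely next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never seen with a follower
        # most_common(1) returns a list holding one (word, count) pair.
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

# Toy training text (an assumption for illustration only).
counts = train_bigram_counts("the cat sat on the mat and the cat sat on the sofa")
print(generate(counts, "the", max_new_words=4))
```

Because the toy model looks at only one previous word, it quickly loops; a larger context is part of what lets real language models stay coherent for longer.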

That leads to a striking mirror effect. Not only do likely words emerge, but the texture and rhythm of a genre or the form of a written task, such as question-answer sets, is reproduced. So, for example, GPT-3 can be fed some names of famous poets and samples of their work, then the name of another poet and just a title of an imaginary poem, and GPT-3 will produce a new poem in a way that is consistent with the rhythm and syntax of the poet whose name has been prompted.

Generating a response means GPT-3 can go way beyond simply producing writing. It can perform on all kinds of tests, including tests of reasoning that involve a natural-language response. If, for example, GPT-3 is given an essay about rental rates of Manhattan rental properties, a statement summarizing the text, such as “Manhattan comes cheap,” and the question “true or false?”, GPT-3 will respond to that entire prompt by returning the word “false,” as the statement doesn’t agree with the argument of the essay.

GPT-3’s ability to respond in a way consistent with an example task, including forms to which it was never exposed before, makes it what is called a “few-shot” language model. Instead of being extensively tuned, or “fine-tuned,” as it’s called, on a given task, GPT-3 has so much information already about the many ways that words combine that it can be given only a handful of examples of a task in the prompt itself, with no further training, and it gains the ability to also perform that new task.
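A few-shot prompt is just text: a handful of worked examples followed by the new case the model should complete. The sketch below only assembles such a prompt as a string and does not call any service. The `Q:`/`A:` layout, the function name `build_few_shot_prompt`, and the sample questions are assumptions chosen for illustration, not a format prescribed by OpenAI.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Assemble worked examples plus a new question into one prompt string."""
    lines = []
    if instruction:
        lines.append(instruction)
        lines.append("")
    # Each example shows the model the pattern it should continue.
    for question, answer in examples:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
        lines.append("")
    # The final question is left unanswered for the model to complete.
    lines.append(f"Q: {query}")
    lines.append("A:")
    return "\n".join(lines)

# Hypothetical examples for a translation-style task.
examples = [
    ("Translate 'cat' to French.", "chat"),
    ("Translate 'dog' to French.", "chien"),
]
prompt = build_few_shot_prompt(examples, "Translate 'house' to French.")
print(prompt)
```

The model would be expected to continue the text after the final `A:`, following the pattern set by the examples.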

The ability to mirror natural language styles and to score relatively high on language-based tests can give the impression that GPT-3 is approaching a kind of human-like facility with language. As we’ll see, that’s not the case.


WHAT CAN GPT-3 DO?

OpenAI has now become as famous — or infamous — for the release practices of its code as for the code itself. When the company unveiled GPT-2, the predecessor, on Valentine’s Day of 2019, it initially would not release to the public the most-capable version, saying it was too dangerous to release into the wild because of the risk of mass-production of false and misleading text. OpenAI has subsequently made it available for download.

This time around, OpenAI is not providing any downloads. Instead, it has turned on a cloud-based API endpoint, making GPT-3 an as-a-service offering. (Think of it as LMaaS, language-model-as-a-service.) The reason, claims OpenAI, is both to limit GPT-3’s use by bad actors and to make money.

“There is no ‘undo button’ with open source,” OpenAI told ZDNet through a spokesperson.

“Releasing GPT-3 via an API allows us to safely control its usage and roll back access if needed.”

At present, the OpenAI API service is limited to approved parties; there is a waitlist one can join to gain access.

“Right now, the API is in a controlled beta with a small number of developers who submit an idea for something they’d like to bring to production using the API,” OpenAI told ZDNet.

